Mastering Llama 3.3 – A Deep Dive into Running Local LLMs

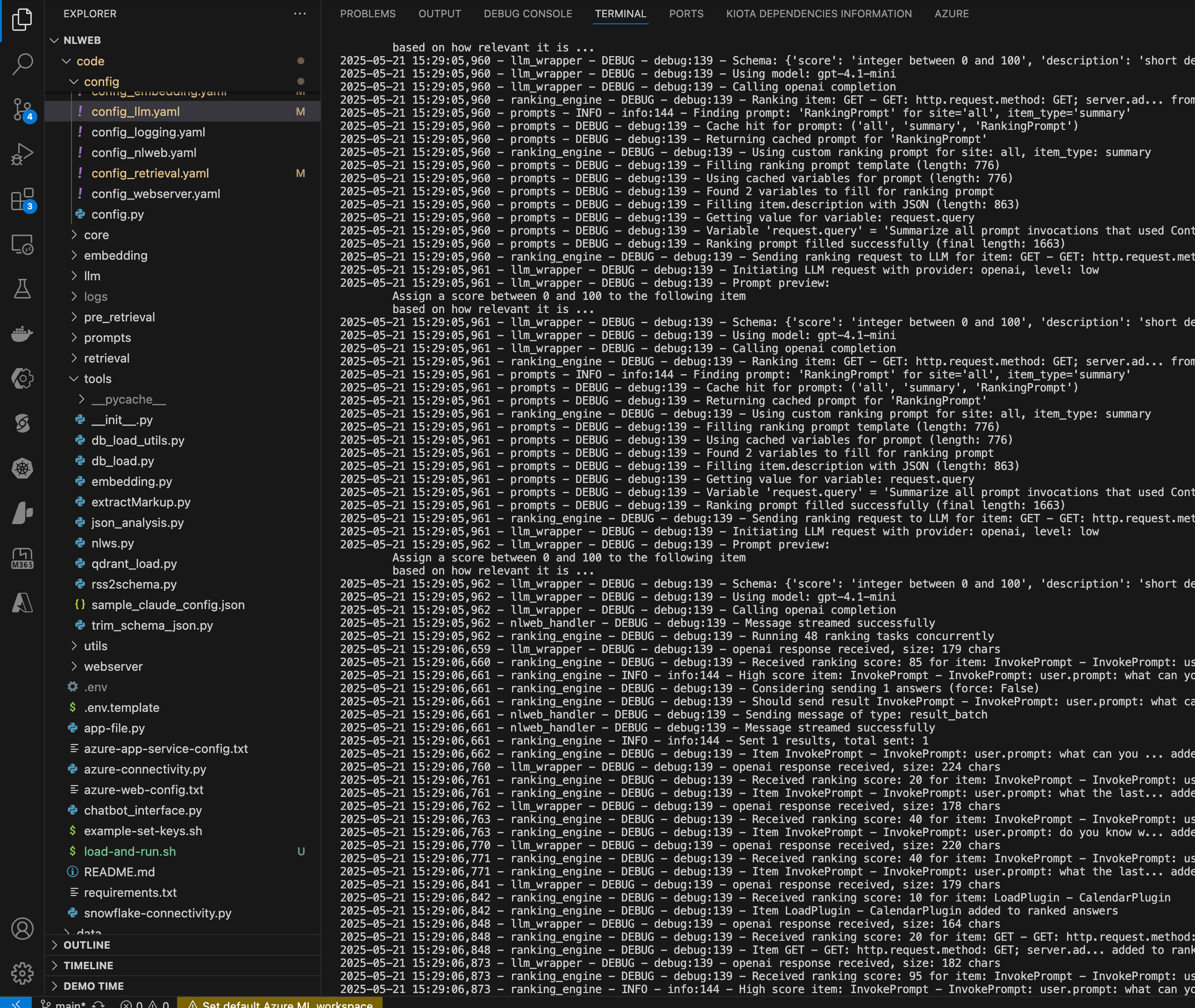

I compared Llama 3.3 with older models like Llama 3.1 70B and Phi 3, tested Microsoft Graph API integrations using OpenAPI specs, and explored function-calling quirks. The results? Not all models are created equal!